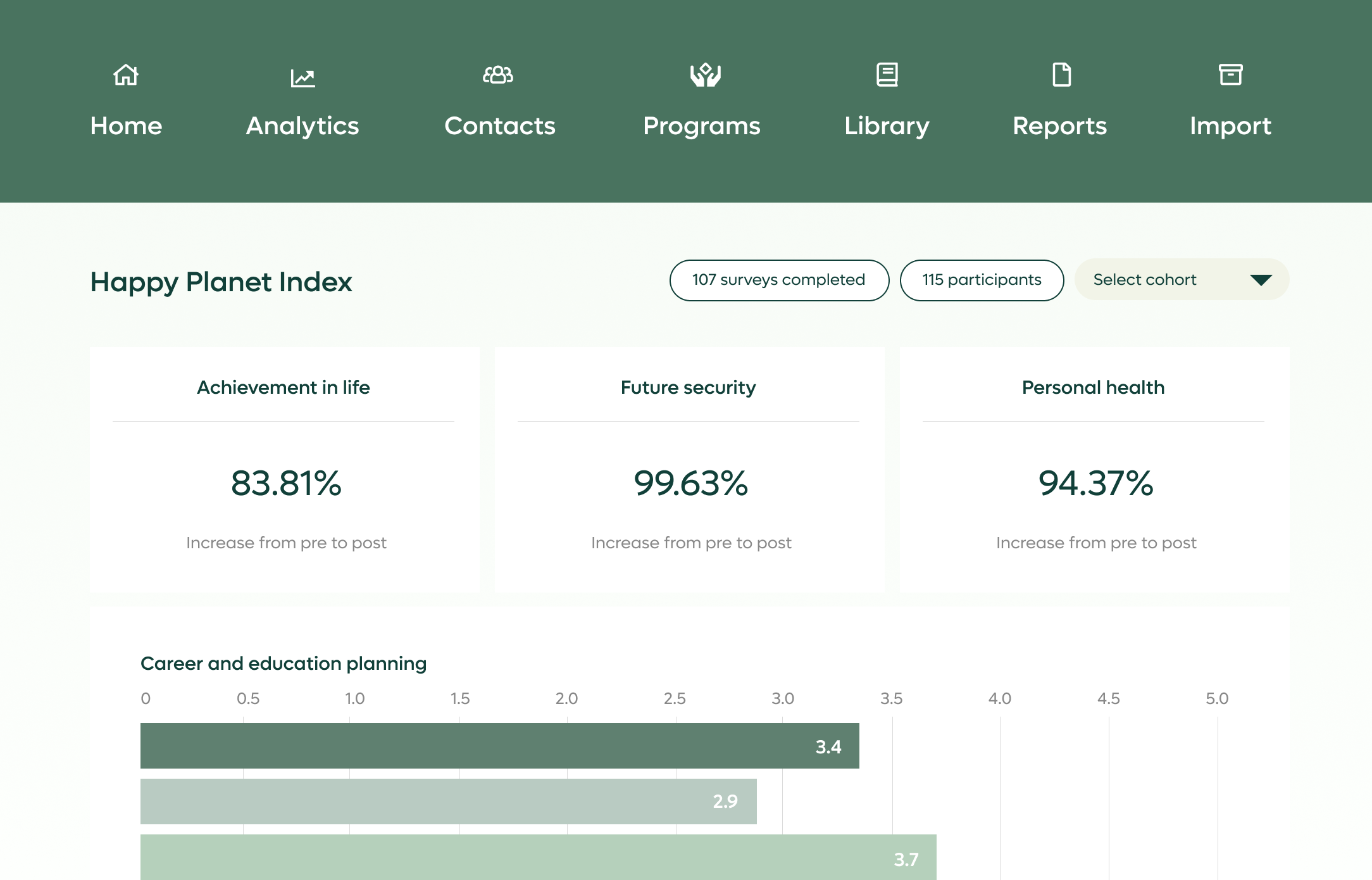

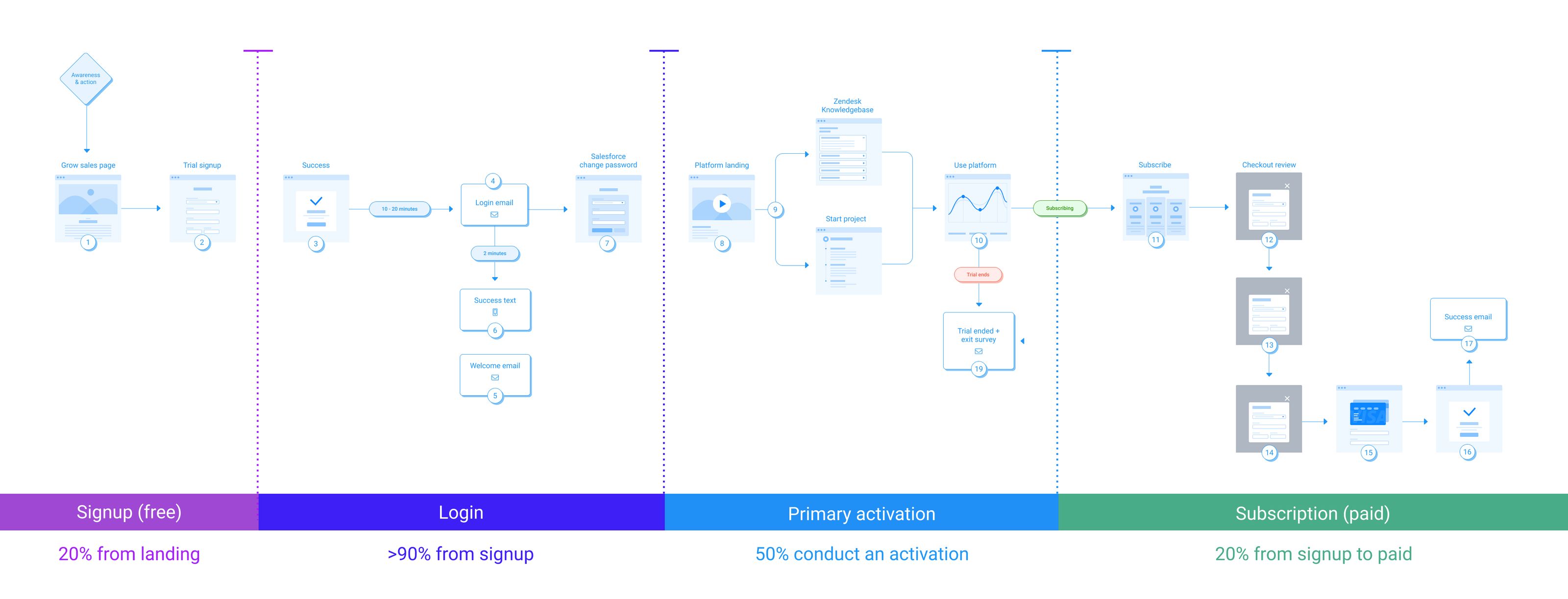

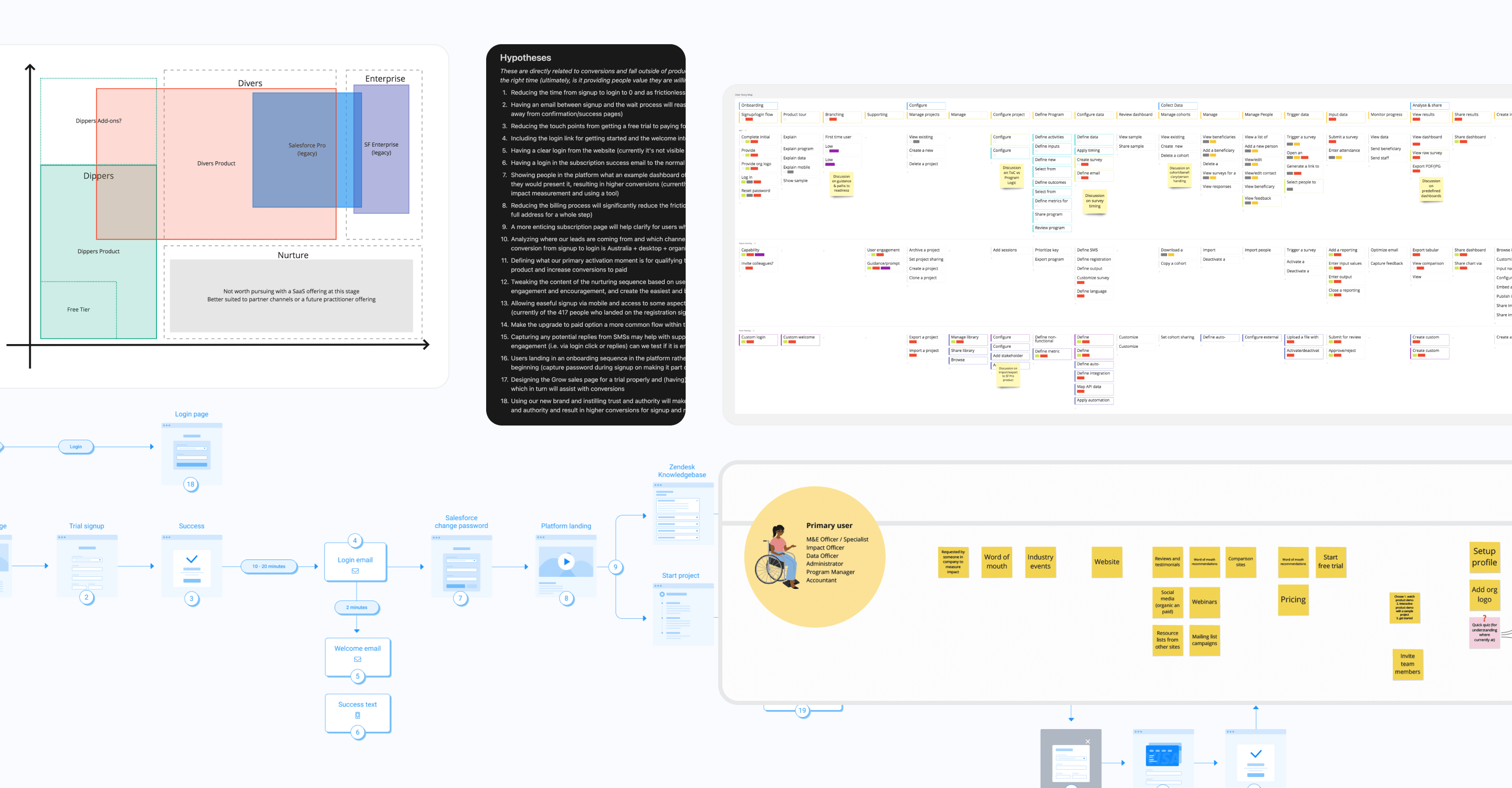

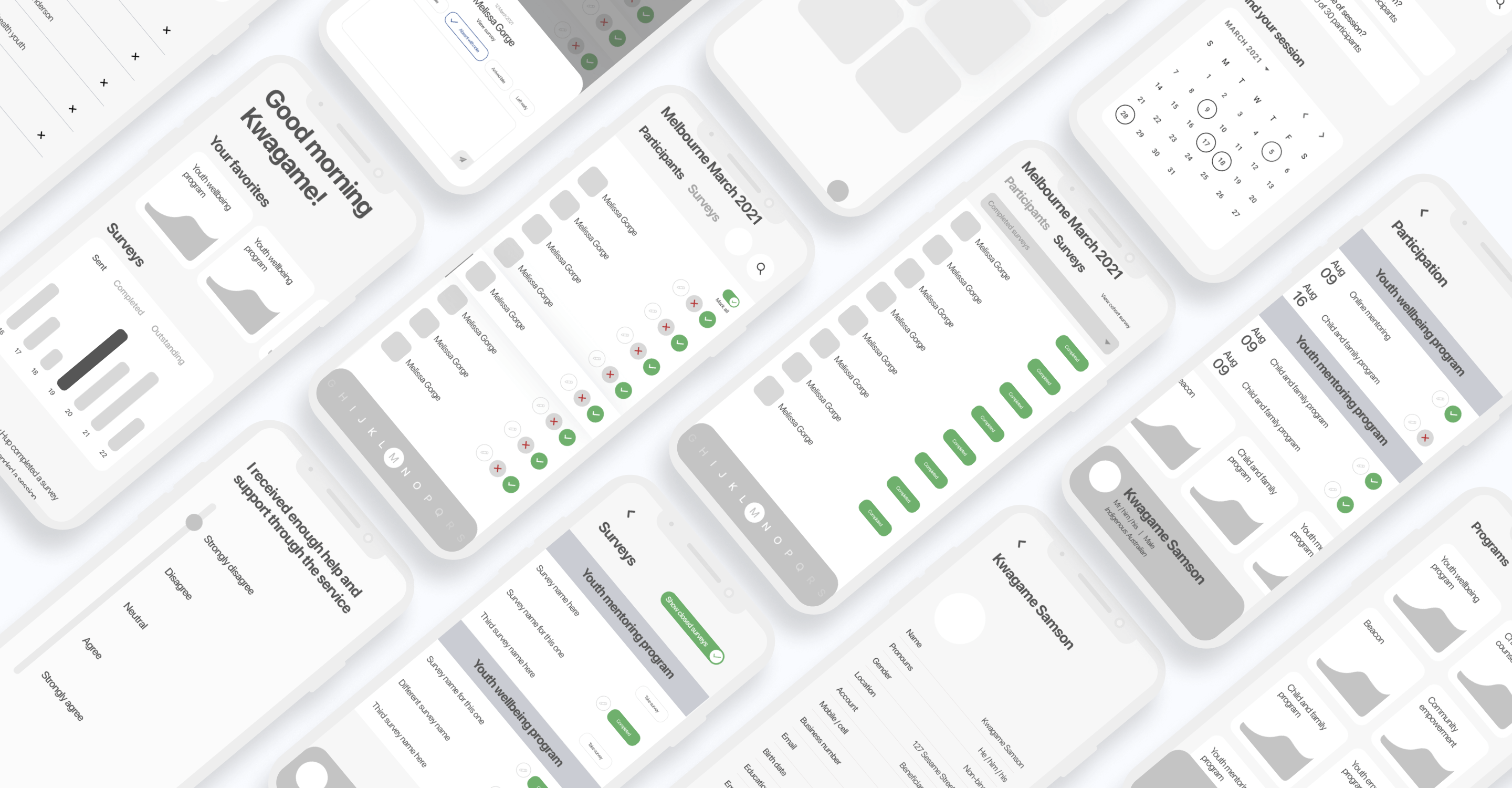

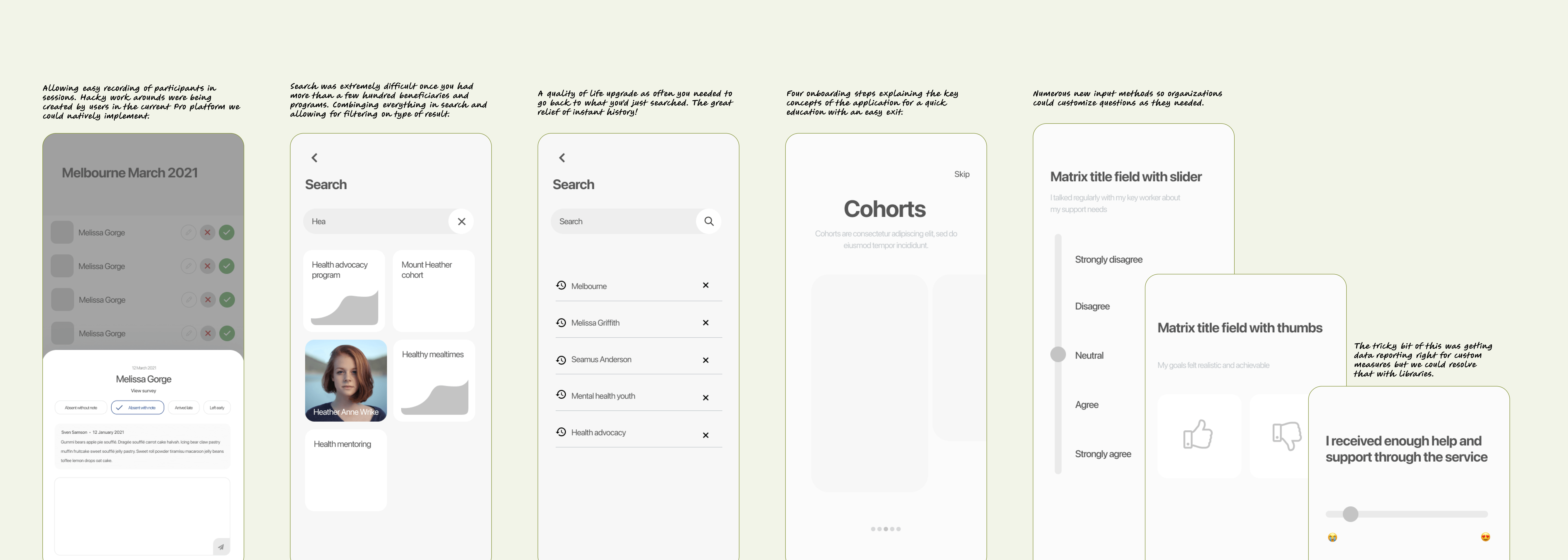

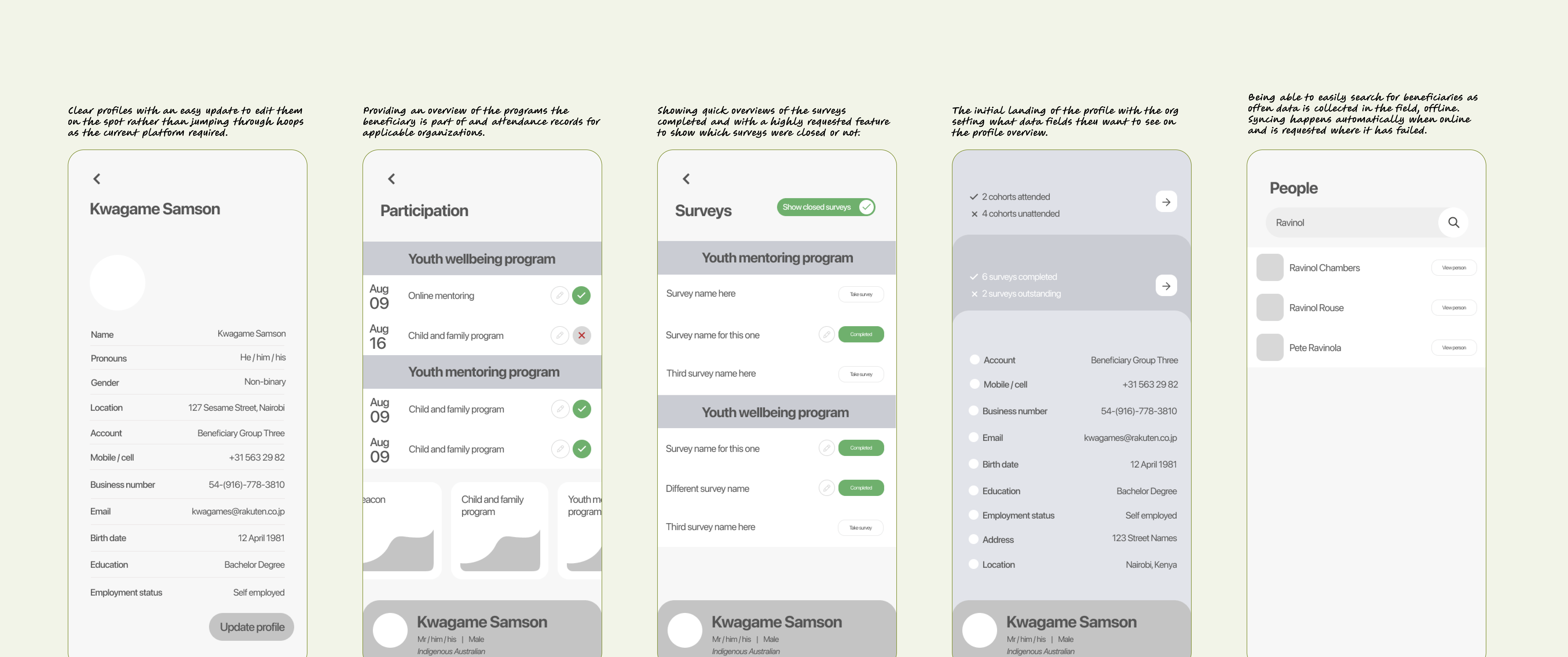

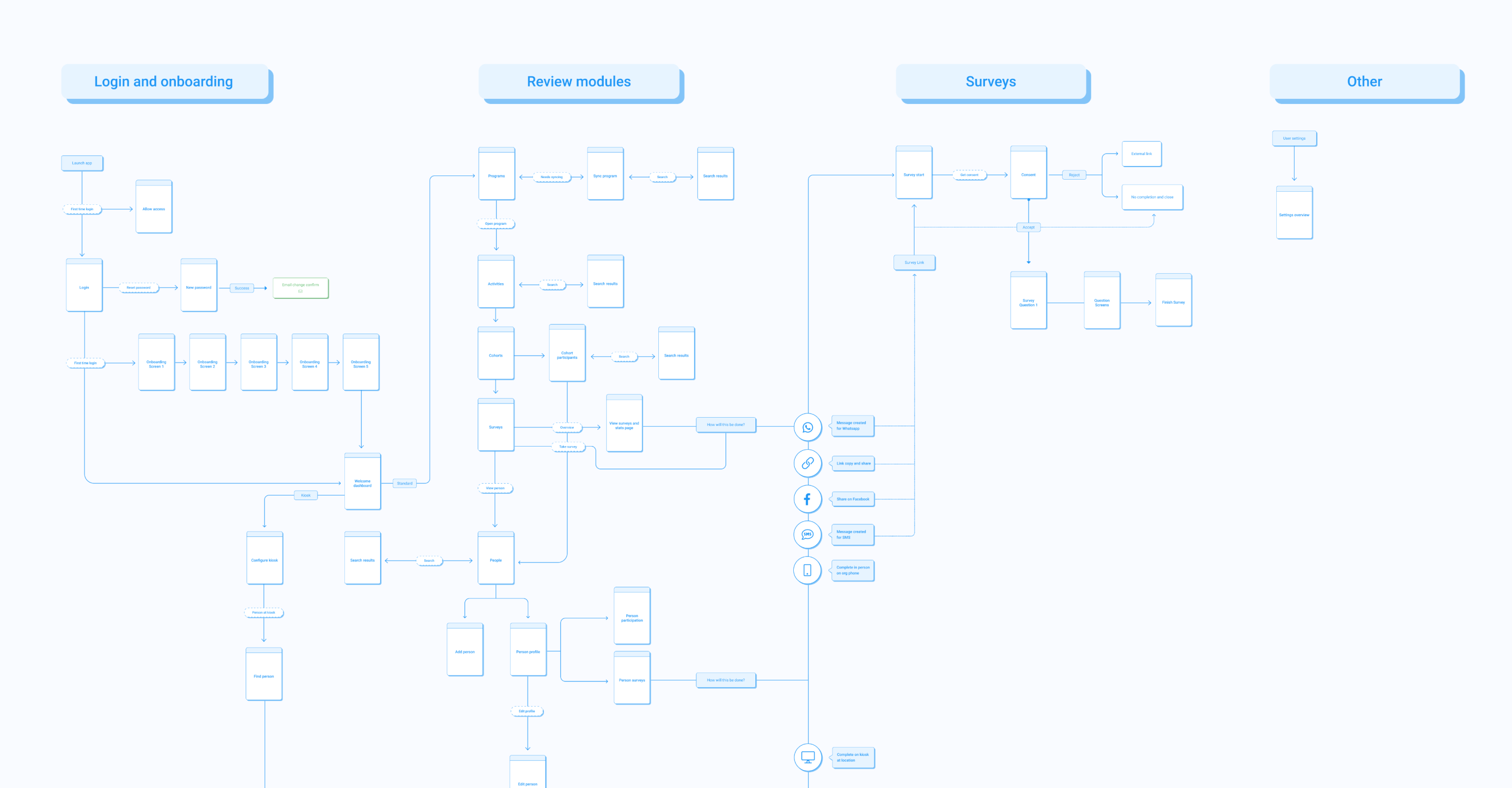

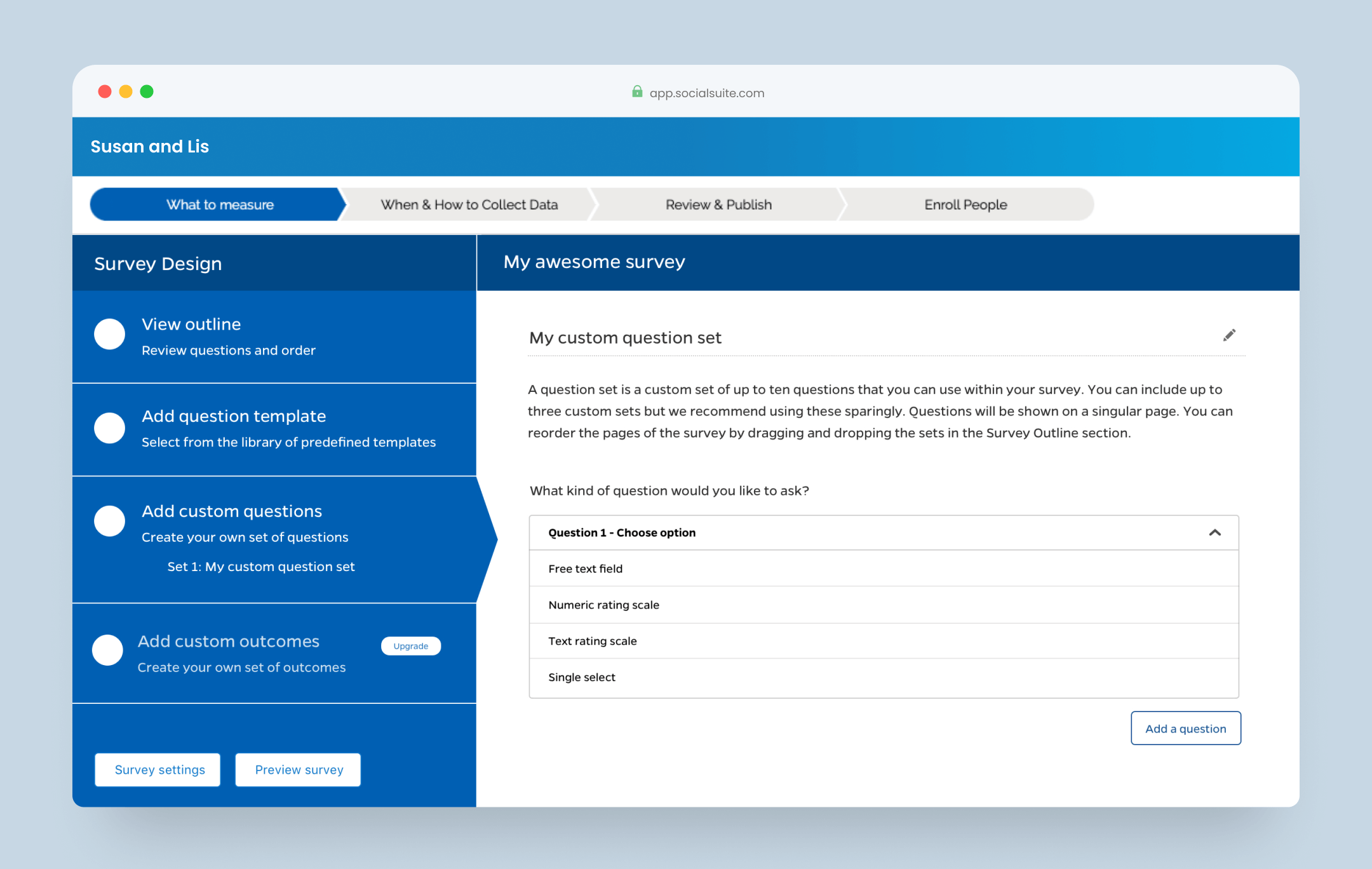

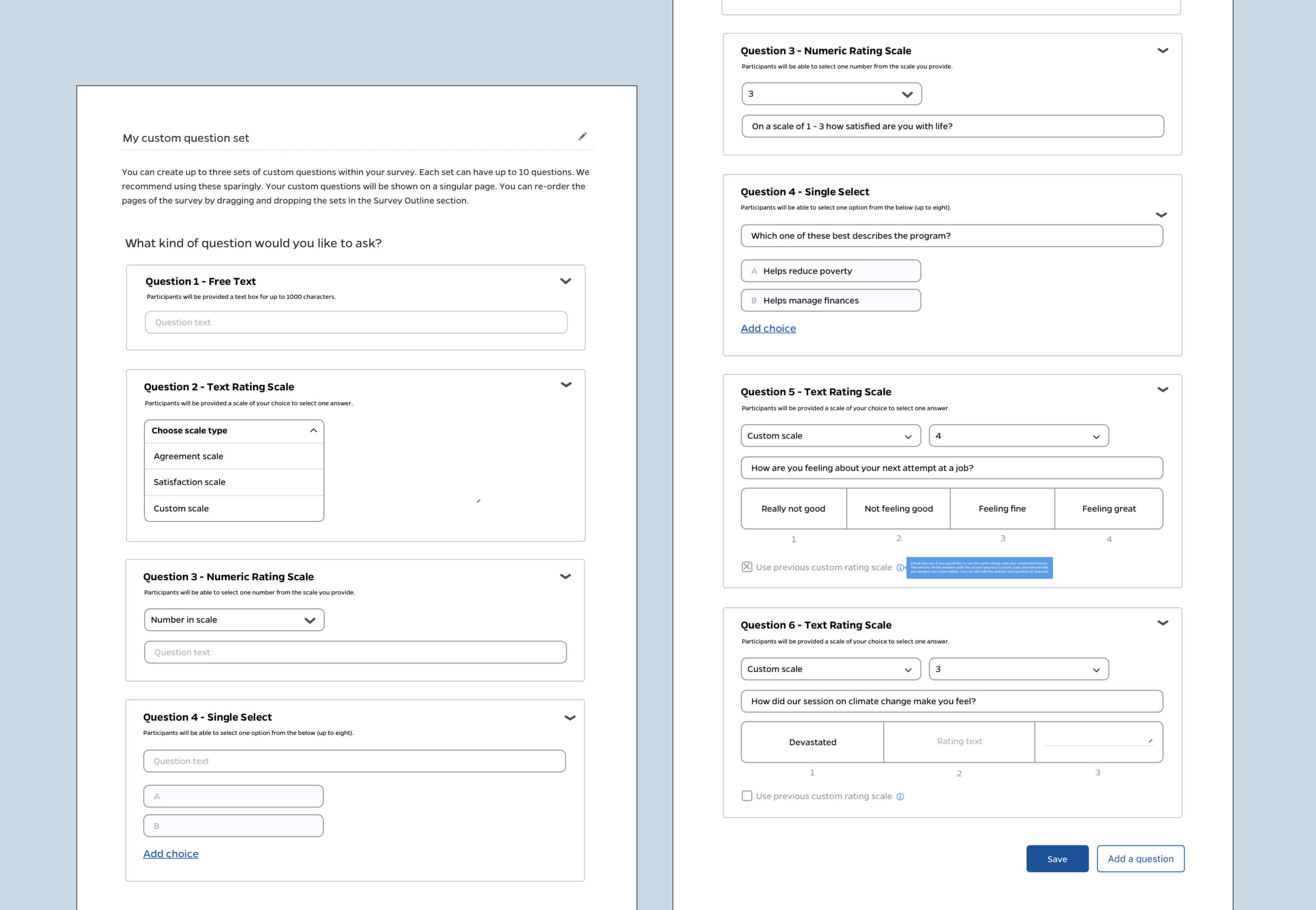

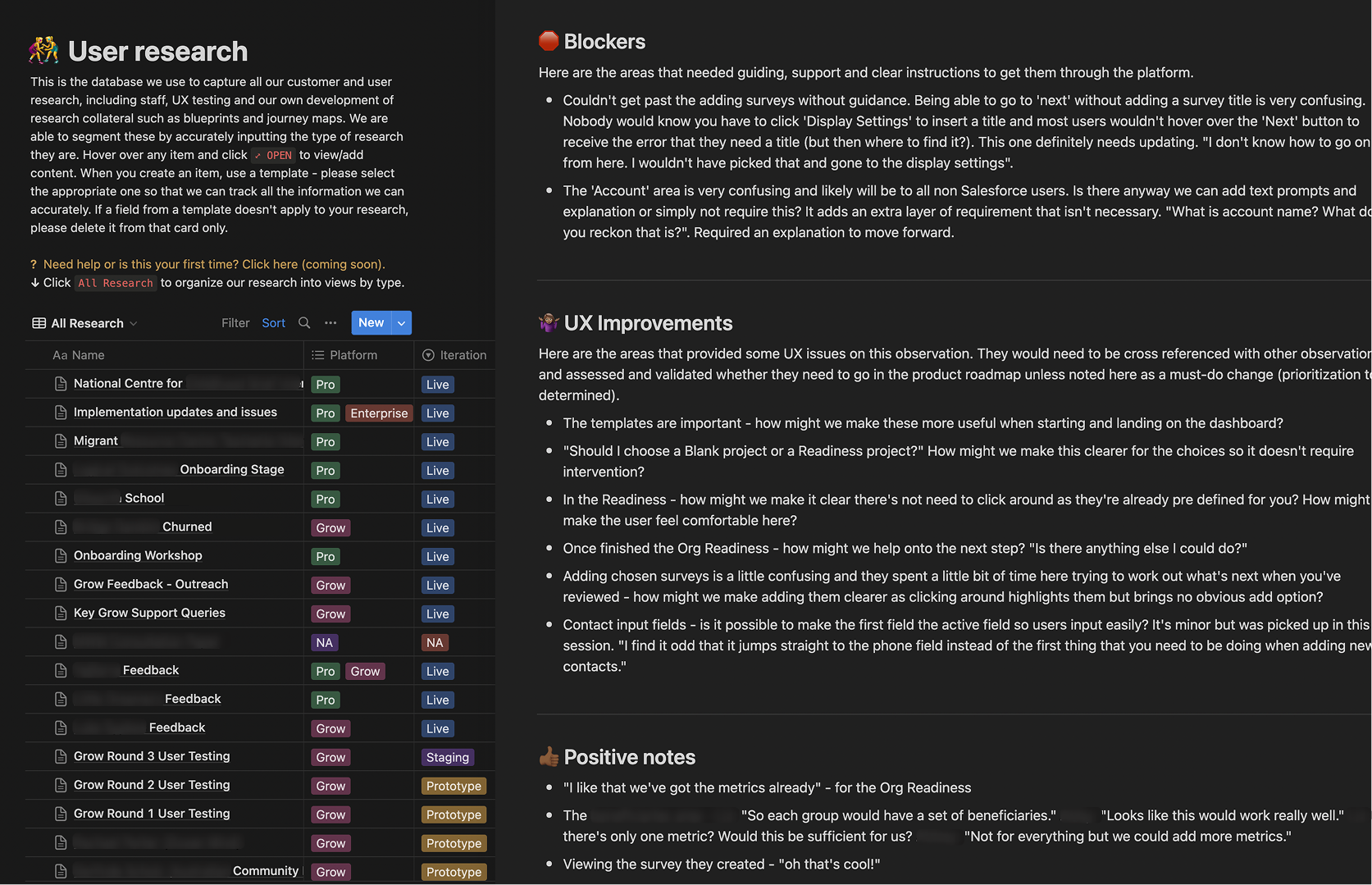

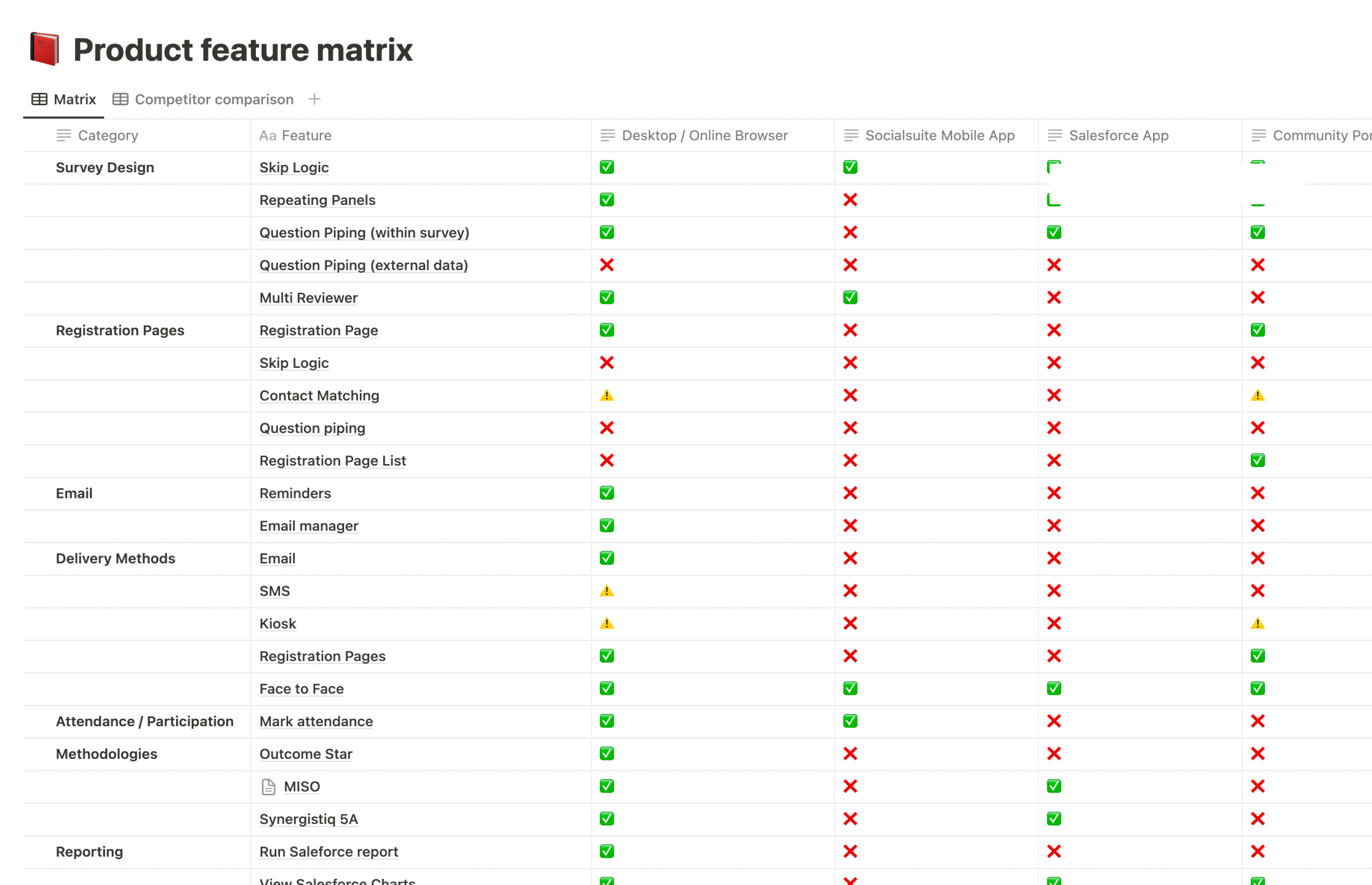

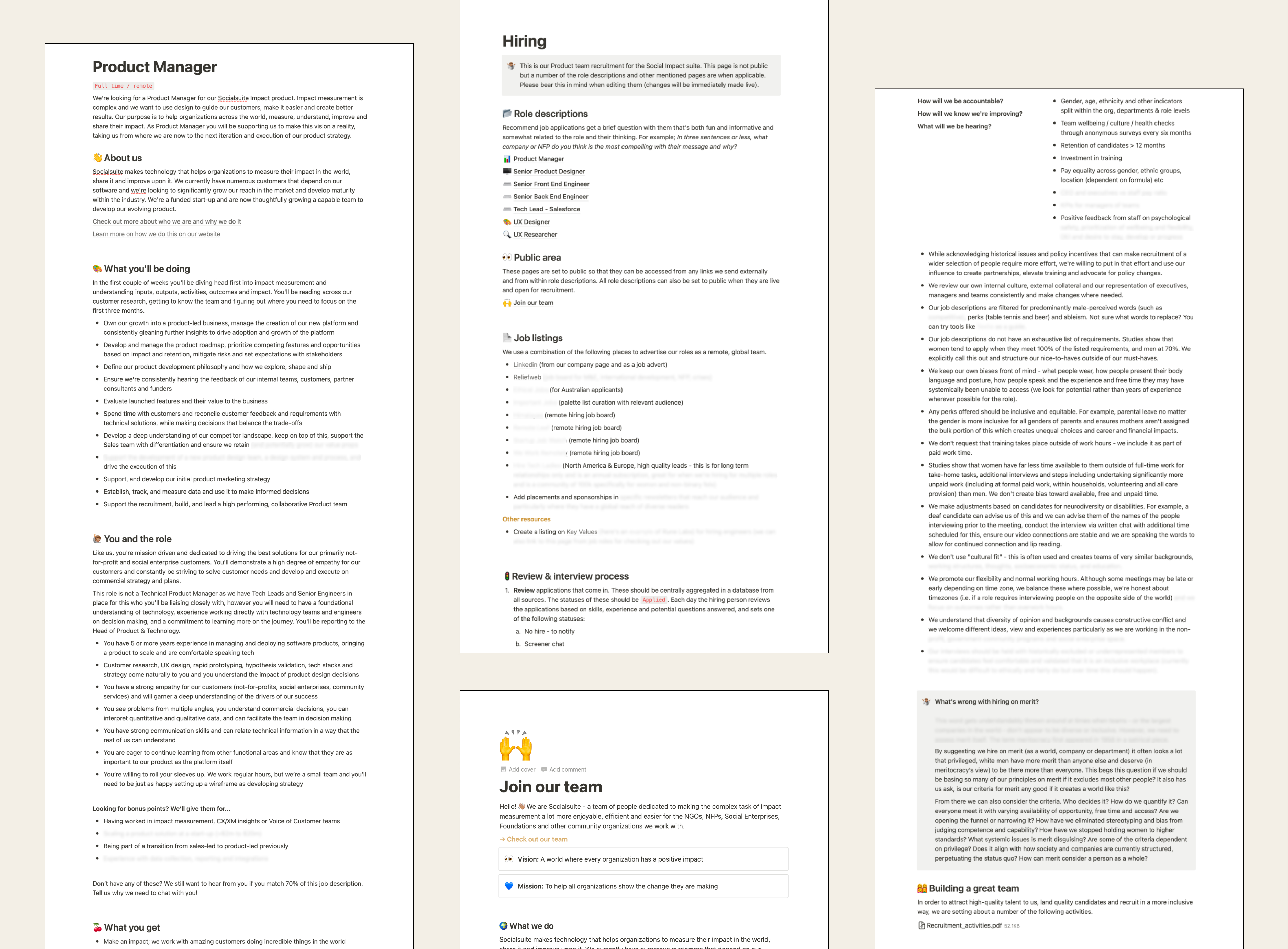

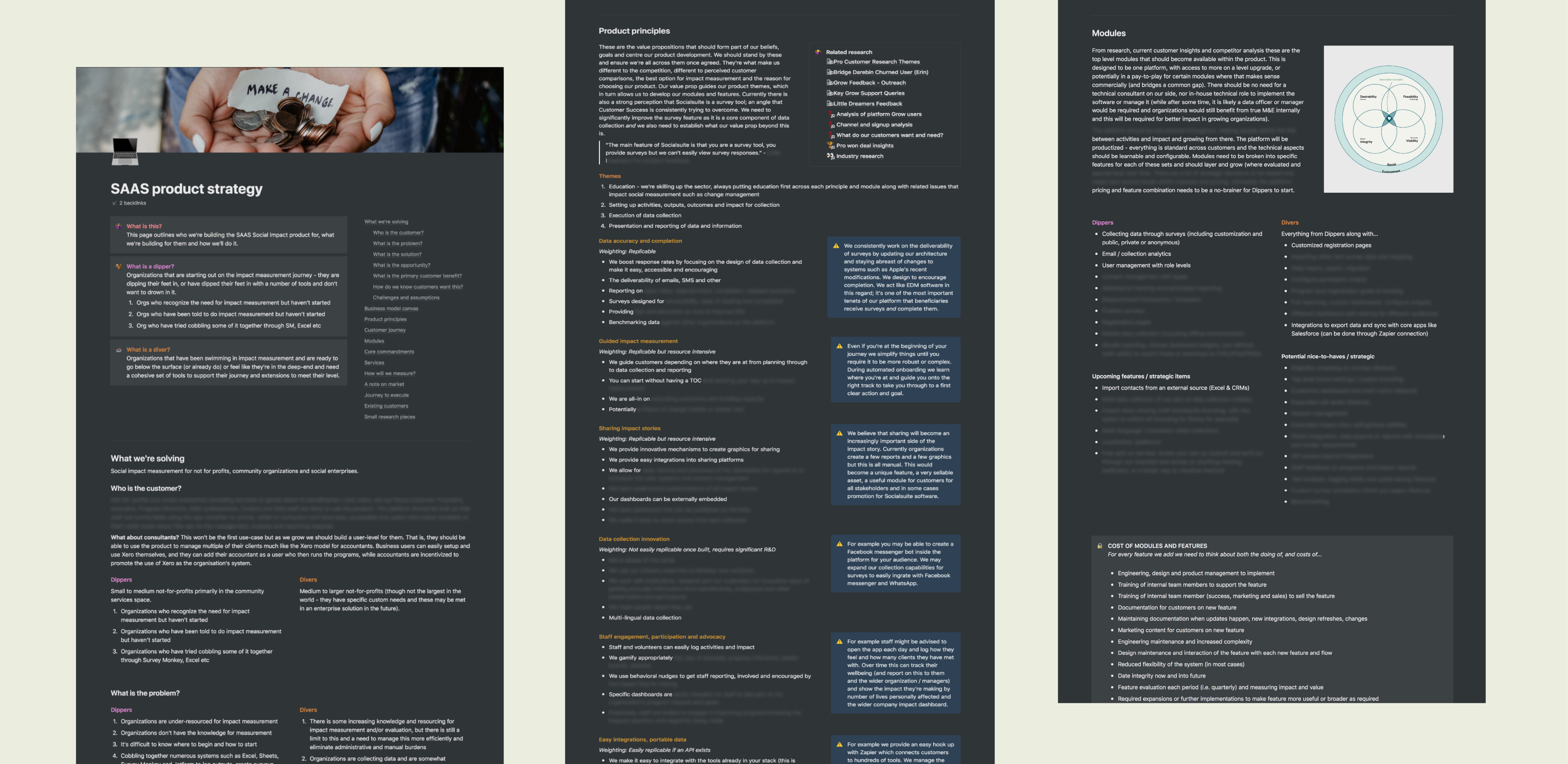

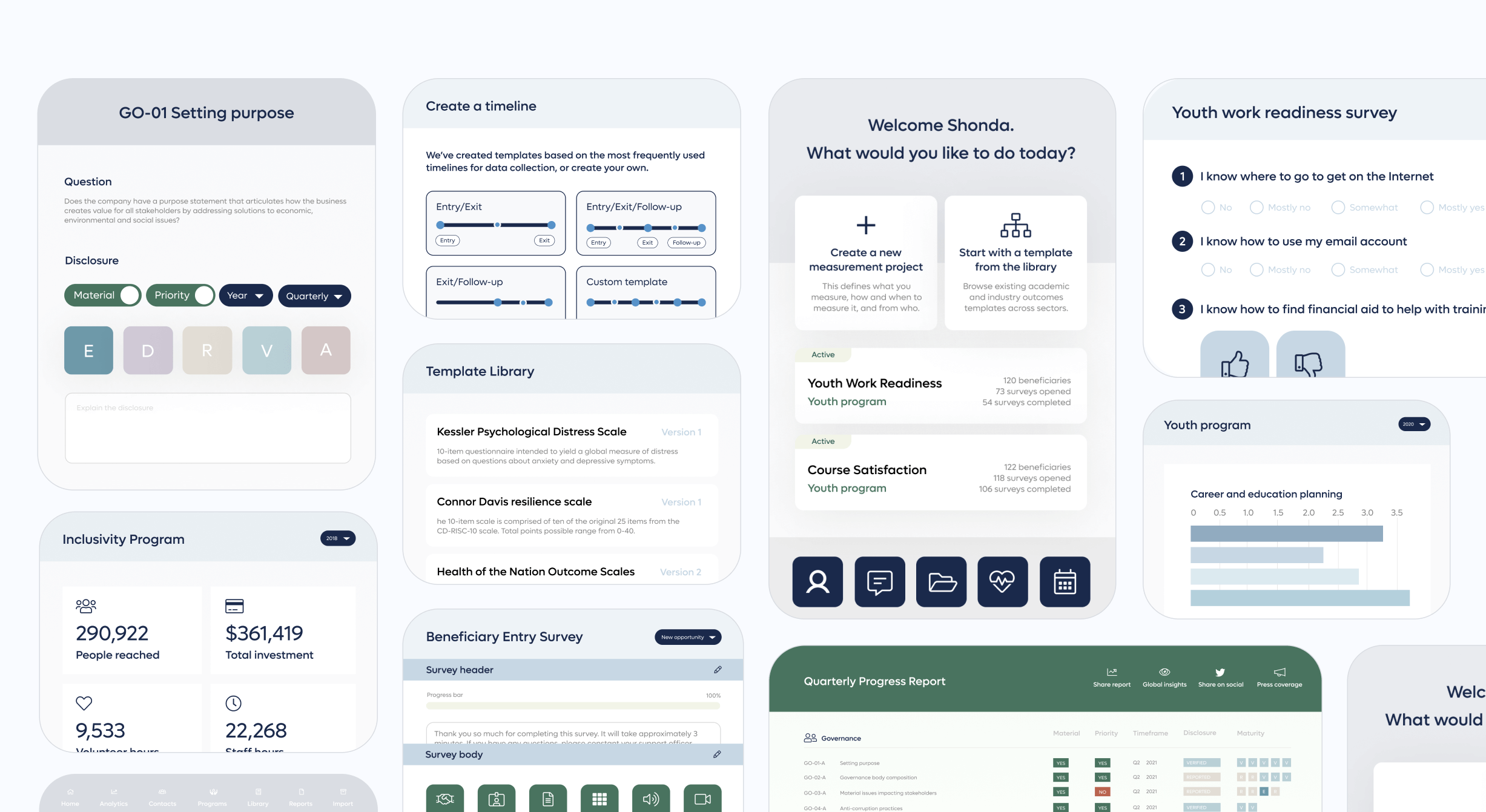

Like the more mature Pro version, this platform had been built on Salesforce, and the company expected that an off-platform solution would be required over the coming years if the product was to grow. The engineering team had a plan for moving off Salesforce in stages. We began with data collection, since it was the easiest component for which to create new architecture and new data models. It also let us significantly improve value by offering a far wider and more applicable range of data collection options. Drawing on feedback and my previous experience with beneficiary and field staff data, I quickly scoped the application to allow offline collection (with automated, manual and fallback online syncing) and collection methods that met multiple beneficiary and admin needs. I wireframed each stage of the application so that we could review the processes with the company and test a prototype, and so that the development team could move ahead and structure the build. Eventually this would free us to focus on the more innovative data collection methods that were being considered.

To make sure we were aligned, I also listed our goals and requirements. For example: see participants *in a program*, for a cohort (*parent*) and an activity (*child*), such as Youth Mentoring > Mentor Training > Sydney March 2019. I also drafted the core user stories, the out-of-scope items, and the platform rules we needed, and worked with the teams to agree on them.
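To make the program > cohort > activity requirement concrete, here is a minimal sketch of how such a lookup could behave. It is an illustration only, not the data model the team built: the `Enrollment` record, the `participants_in` function, the participant names, and every sample value other than "Youth Mentoring > Mentor Training > Sydney March 2019" are assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Enrollment:
    """Hypothetical record linking one participant to one activity."""
    participant: str
    program: str
    cohort: str    # parent level within the program
    activity: str  # child level within the cohort


def participants_in(
    enrollments: Iterable[Enrollment],
    program: str,
    cohort: Optional[str] = None,
    activity: Optional[str] = None,
) -> List[str]:
    """Return sorted, unique participant names for a program,
    optionally narrowed to a cohort and then to an activity."""
    return sorted({
        e.participant
        for e in enrollments
        if e.program == program
        and (cohort is None or e.cohort == cohort)
        and (activity is None or e.activity == activity)
    })


# Invented sample data; only the first path comes from the text above.
ENROLLMENTS = [
    Enrollment("Participant A", "Youth Mentoring", "Mentor Training", "Sydney March 2019"),
    Enrollment("Participant B", "Youth Mentoring", "Mentor Training", "Melbourne April 2019"),
    Enrollment("Participant C", "Youth Mentoring", "Mentee Onboarding", "Sydney March 2019"),
]

if __name__ == "__main__":
    print(participants_in(
        ENROLLMENTS, "Youth Mentoring", "Mentor Training", "Sydney March 2019"
    ))
```

Leaving the cohort or activity unset widens the view to the level above, which is one way the same screen could serve both program-level and activity-level questions.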

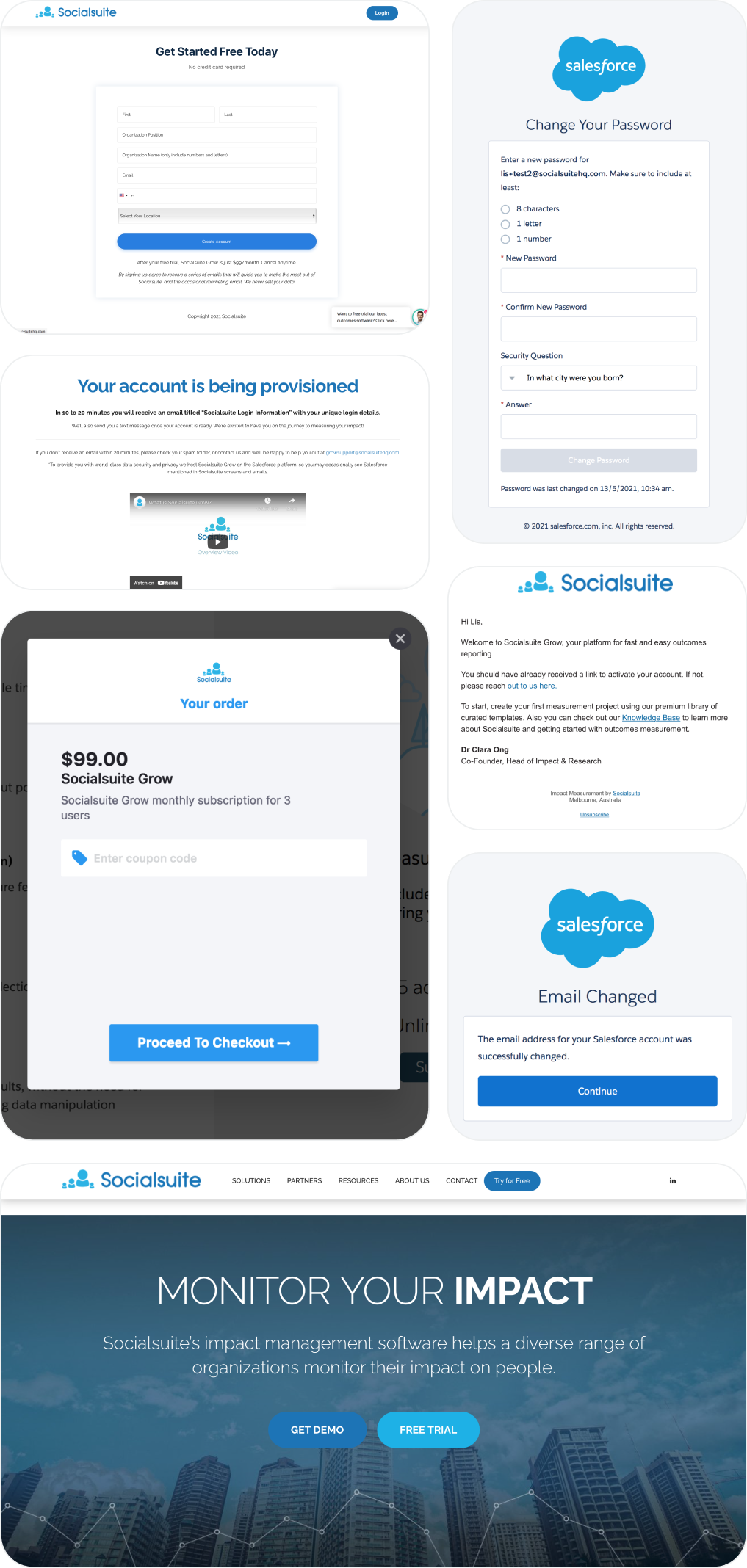

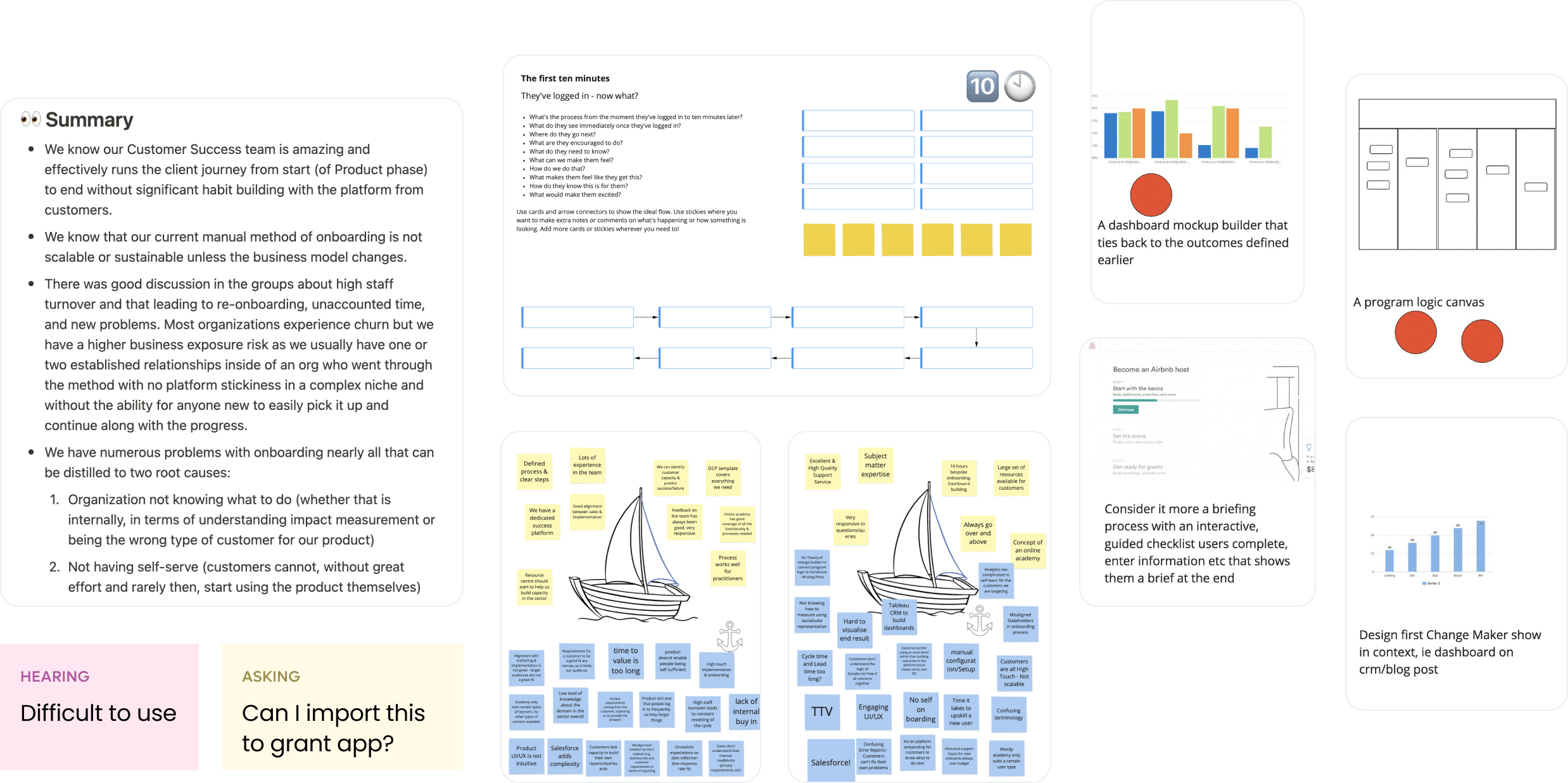

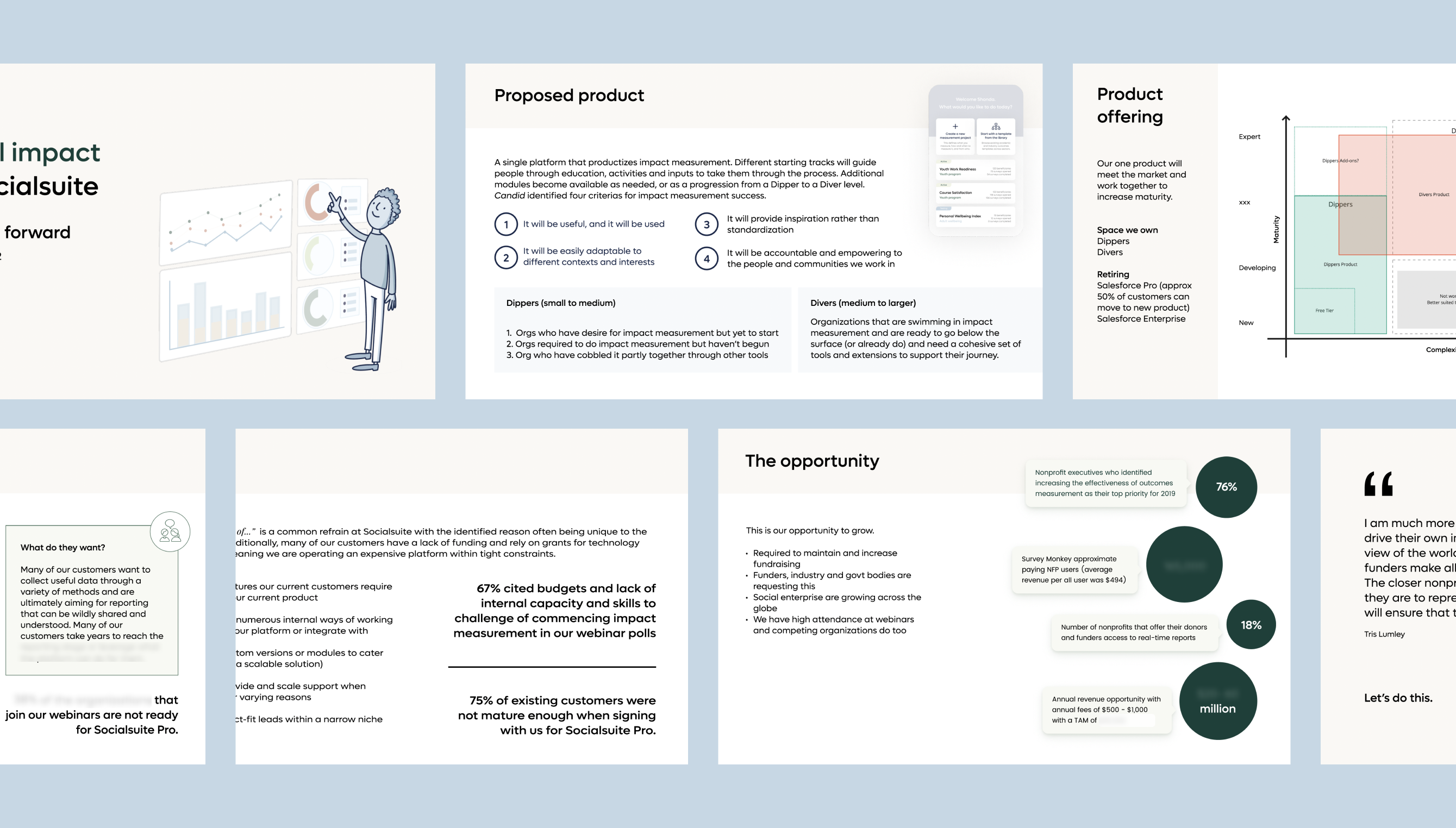

Even people experienced with Salesforce took a couple of months to become comfortable with the Pro platform, because of how far it had to deviate from native usage. How could we design based on needs instead?